The 2024 US presidential election revealed AI's dual impact: while no single 'bombshell' AI disinformation event disrupted the election, the technology enabled widespread low-quality disinformation and targeted manipulation through cheapfakes, propaganda, hyperlocal campaigns, and platform-amplified negative content—exposing structural vulnerabilities in America's digital political landscape.

Artificial intelligence is increasingly reshaping election campaigns, offering tools for both legitimate strategies and disinformation. This publication explores the impact of AI on democratic processes, highlighting its role in shaping public perception and influencing political dynamics.

The full analysis is available in German.

Explore the Data

AI-Incidents

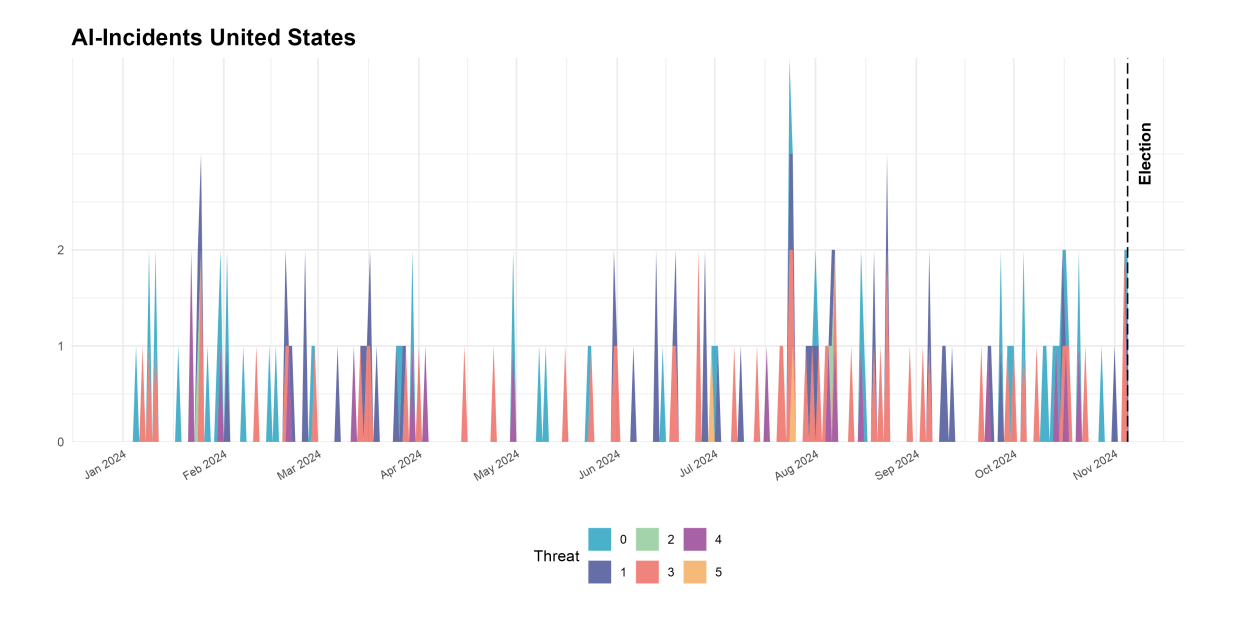

The presidential election in the United States was held on November 5, 2024. The analysis covered the period from January 1 to November 11, 2024. A total of 46,022 news articles were analyzed to identify AI-related incidents, and 146 incidents were recorded. Many incidents were reported by multiple news outlets, which led to extensive coverage; however, each incident was counted only once in the listing.

Trends:

- A significant volume of AI-generated content circulated in connection with the US presidential election

- The Prevalence of Low-Quality AI-Generated Content

Most of the AI-generated visual content employed in the US presidential campaign consisted not of sophisticated deepfakes but of cheapfakes: synthetic media of poor quality that users can easily identify as AI-generated.

- AI Propaganda

AI's ability to rapidly produce persuasive and tailored content has enabled both political candidates and their supporters to dominate political discourse. For example, AI-generated false claims, such as Taylor Swift endorsing Donald Trump’s candidacy or Kamala Harris supporting communism, have been strategically crafted to influence public perception.

- AI fundamentally changed elections and political campaigning

The integration of AI into political campaigning has revolutionized traditional processes by automating various aspects of election strategies and enabling the rapid production and dissemination of content within the digital landscape (Muñoz, 2024).

- Polarization increases intentional spread of disinformation

Affective polarization in the United States has become increasingly evident, manifesting not only in the fragmentation of the media landscape but also in how political information is exchanged in the digital sphere. In this environment, even when individuals are aware that political information is factually incorrect, they often choose to believe and disseminate it to bolster their preferred candidate (Litrell et al., 2023).

- Deepfakes and Cheapfakes used to discredit the political opponent

- Liar's Dividend

This phenomenon involves authentic images being dismissed as AI-generated, whether by political opponents or by the public, even though they are real.

Proxy Variables:

- Trust in News

- Source of News

- Social Media Usage

- Trust in Media and Government

Vulnerabilities:

- Hyperlocal Disinformation campaigns

Strategic voter manipulation through AI-generated disinformation campaigns that specifically target local politics is a significant risk. This risk stems from the continuous decline of local journalism and the resulting lack of resources to debunk false claims. As a result, fact-checking is often left to the candidates themselves, who must rely primarily on their own resources (Muñoz, 2024).

- Challenges in Detecting AI-Generated Content

AI-generated content benefits from being produced quickly and at a low cost, and as technology advances, detecting such content is becoming increasingly difficult. The ease of access to AI tools has led to their widespread use, making them a prevalent force in digital communication. Research shows that social media users often struggle to identify AI-generated content, largely due to their reliance on outdated strategies. As AI systems become more sophisticated, traditional detection methods are proving less effective, further complicating efforts to discern authentic content from artificially generated material (Frances-Wright et al., 2024).

- Targeted language-based disinformation campaigns

Another vulnerability exists in the form of targeted, language-based disinformation campaigns aimed at specific population groups in the U.S., with the Spanish-speaking minority being particularly vulnerable to such manipulation.

- Threat to Political Trust: Undermining Confidence in Leadership

Particularly striking are deepfakes that personally attack politicians and have the potential to undermine voters' trust in political leadership. The deepfakes of President Biden illustrate this: political opponents shared audio and video deepfakes that reinforced existing concerns about the president's physical and mental health and helped undermine confidence in him and his mental fitness (Muñoz, 2024).

- Highly personalized political campaigning fueling fragmentation and polarization

Personal data is widely available for purchase in the US. Combined with AI, this allows political campaigns to create tailored political messages for voters. This trend can increase the fragmentation of the public by reinforcing people's existing political opinions and beliefs. Remaining in one’s own bubble of political opinions increases, in the long run, the risk of polarization (Muñoz, 2024).

- Blurring the Line Between Parody and Harmful Misinformation

One example is the AI-generated video posted by Elon Musk on Twitter, depicting him and Donald Trump dancing. Although the scenario is clearly fictional and easily recognizable as AI-generated, the content was not labeled to signal its artificial nature. This raises the question: where do we draw the line between harmless parody and content that poses a real threat? As AI-generated media becomes increasingly sophisticated, even clear labels may not be enough to prevent confusion or manipulation. Even content that appears clearly fictional or humorous can be widely shared and distorted.

- AI-generated content not removed by platforms

Social media platforms have been slow to remove large-scale AI-driven disinformation. Furthermore, it has been found that AI-generated content is often not labeled as such, making it difficult for users to identify and critically evaluate it. As the quality of AI-generated content continues to improve, the vulnerability to manipulation increases, posing significant challenges to user awareness and the integrity of online discourse.