In France's 2024 elections (European Parliament and national legislative elections), AI emerged as a significant force across multiple dimensions, from external interference attempts and candidate manipulation to parody and voter profiling. The country's low trust in media and institutions, combined with uneven platform governance, created vulnerabilities to AI-driven influence operations.

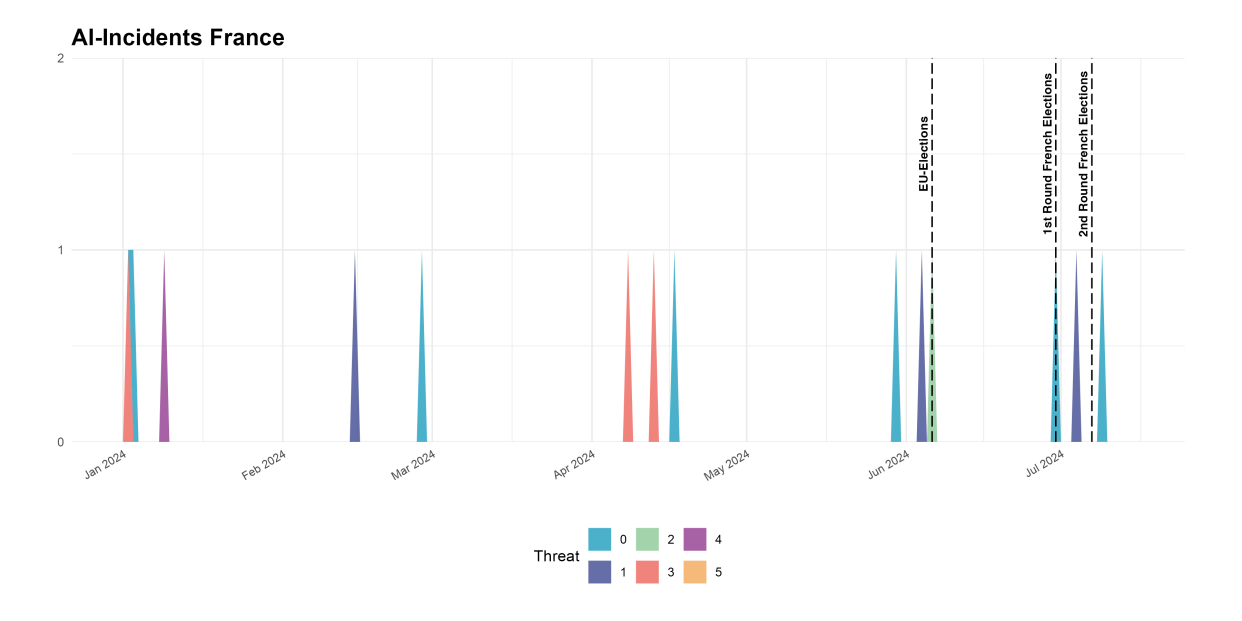

Two elections took place in France during the period covered by this study. The first was the European election, held from the 6th to the 9th of June. After the results of the European election, Emmanuel Macron dissolved the National Assembly, triggering legislative elections in France on the 30th of June and the 7th of July.

The analysis of AI-Incidents in France is based on 482 news articles published between 01.01.2024 and 12.07.2024, from which 14 AI cases were identified. The analysis was carried out in collaboration with Nicolas Téterchen from the German Council on Foreign Relations (DGAP).

The PDF Country Factsheet and a more in-depth analysis are coming soon.

AI-Incidents

Trends:

- AI used by political candidates

Some political candidates used AI to modify photos of themselves or to generate them entirely. The intent here is clear: to mislead voters, since the images were not labelled.

- Data driven campaigns and increased voter profiling

The growing use of AI has been accompanied by a shift in the techniques used to reach voters directly.

- Personal deepfake attacks on popular candidates

- AI used to create parodies

AI is also frequently used to create parodies, which should not be seen as harmless: what appears to be a tasteless joke can nevertheless have a tangible effect on people's perceptions.

- Growing awareness from politicians

There is growing awareness of AI's power over public opinion, and many political actors on all sides have publicly denounced it.

Proxy Variables:

- Trust in News

- Source of News

- Social Media Usage

- Trust in Media and Government

Vulnerabilities:

- Access to deepfakes by the most vulnerable

Facebook, which is widely used to share information and is the social network on which the most fake news circulates [10], is particularly popular among the elderly [11]. Seniors are also the most vulnerable group: they are more than seven times more likely than 18–29-year-olds to interact with fake news online [12], which spreads six times faster than real news [13]. The growing presence of AI-generated content on social media, combined with the lack of media literacy in this most vulnerable group, is a major vulnerability.

- Lack of cooperation between social media platforms and government, and weak enforcement of rules on AI

Little progress has been made in this area despite strong public demand: 84% of French people want tech giants and governments to work together to limit the influence of AI on elections [14]. The French government's efforts in this direction have even proved counterproductive: a French government campaign was blocked on X because of an anti-fake news law [15]. Moreover, even though social media platforms have their own policies requiring AI-generated content to be labelled, several studies have shown that deepfakes are abundant and remain online even without a label.

- Lack of Trust in Government and Media

- Growing Polarization

Polarization makes it easier for hyper-personalized content to play into existing biases. Such content is less likely to be questioned, and AI can be used to exploit this effect and further escalate polarization.

- Exploiting Algorithmic Expertise to Engineer Visibility and Reach on Social Media

Algorithmic expertise and existing digital infrastructure (fake accounts, bots and inauthentic engagement) are leveraged to engineer visibility and reach, manipulate online behavior and, ultimately, mobilize potential voters.

- AI-generated parody that is legally labelled but can still set narratives and shape public perceptions

- Increase in Use of Chatbots

Chatbots can be used intentionally to spread misinformation, and several Reuters Institute studies show that chatbots also exhibited significant flaws during the European elections.